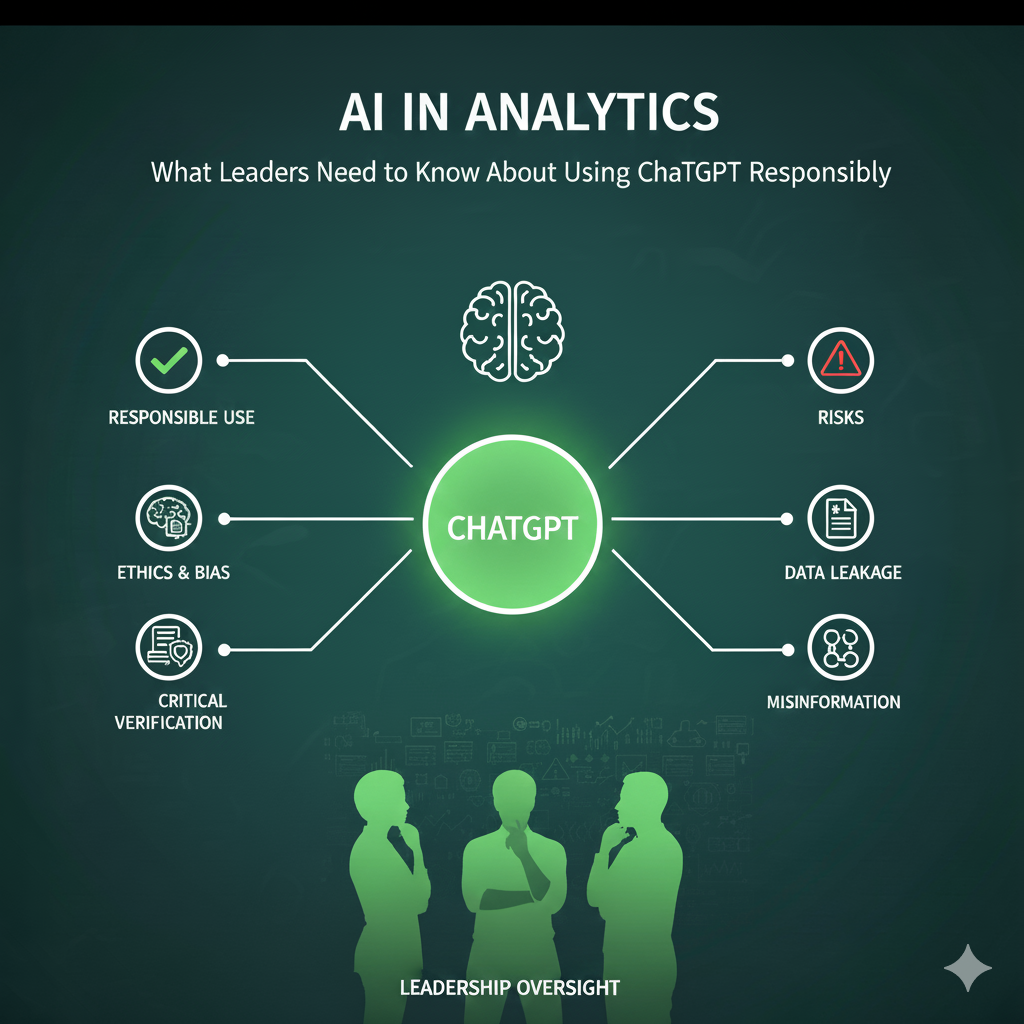

AI in Analytics: What Leaders Need to Know About Using ChatGPT Responsibly

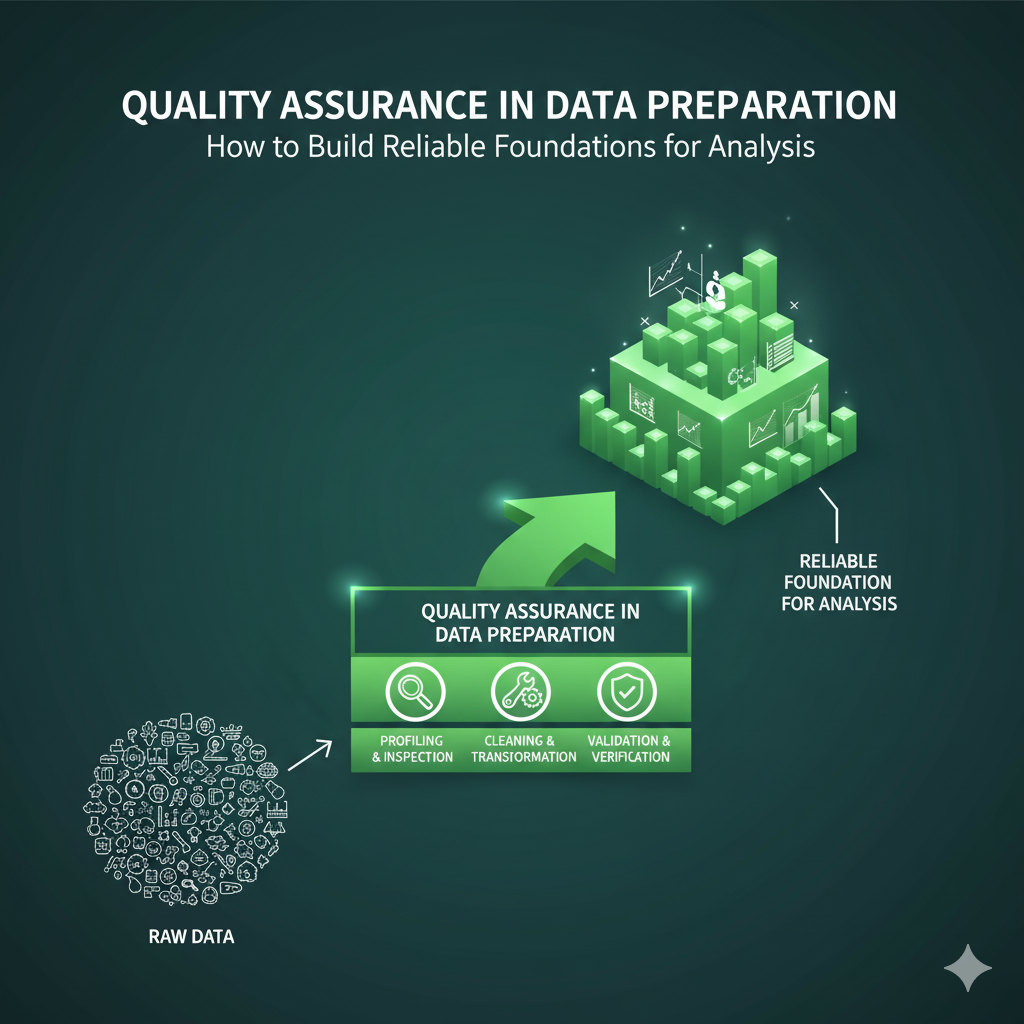

As organizations race to modernize their data capabilities, AI tools like ChatGPT are reshaping how teams interact with information. From generating SQL queries to explaining Python code, generative AI can accelerate analysis and insight creation across departments. Yet, while these tools offer tremendous potential, they also introduce new risks in data governance, privacy, and reliability that leaders cannot ignore.

At the Certified Data Intelligence Professionals Society (CDIPS), we believe that the responsible use of AI begins with awareness. Understanding both the opportunities and limitations of large language models (LLMs) like ChatGPT is essential to building a data-intelligent organization that values accuracy, ethics, and human judgment.

1. The Reality: Your Team Is Already Using ChatGPT

Since its release, ChatGPT has become one of the fastest-adopted technologies in history. Whether or not your organization has formalized policies, employees are likely experimenting with it to write scripts, generate reports, or troubleshoot analytics code.

This democratization of AI brings innovation—but also risk. Without proper guidance, employees may inadvertently share sensitive or proprietary data with external systems. That’s why the first step in any AI adoption plan should be awareness and governance.

2. The Hidden Risks of Generative AI

While ChatGPT can deliver quick insights, its use in data analysis carries several key risks leaders must mitigate:

- Data Privacy: Free or public versions of AI tools should not be treated as private, because prompts may be retained by the provider. Uploading internal datasets, client information, or database structures could expose your organization to data breaches or compliance violations.
- Hallucination and Inaccuracy: LLMs can fabricate details, producing confident but false results. Relying on AI-generated numbers or code without verification could lead to costly errors or reputational damage.
- Bias and Judgment Gaps: AI models lack human context and ethical reasoning. They may reproduce biases present in their data or generate insights that, while plausible, are misleading when applied in real-world contexts.

To address these issues, organizations need clear usage policies, AI literacy training, and data-handling protocols aligned with professional standards such as those upheld by CDIPS.
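A lightweight way to act on the hallucination risk is to recompute any figure an assistant reports from the source data before it reaches a decision. The Python sketch below assumes a small in-memory list of sales records and a hypothetical `claimed_total` copied from a chat response; `recompute_total` and `verify_claim` are illustrative helper names, not part of ChatGPT or any library.

```python
from math import isclose


def recompute_total(records: list[dict], field: str) -> float:
    """Recompute a column total directly from the source records."""
    return sum(float(row[field]) for row in records)


def verify_claim(claimed: float, actual: float, rel_tol: float = 1e-9) -> bool:
    """Return True only if the AI-reported figure matches the recomputed one."""
    return isclose(claimed, actual, rel_tol=rel_tol)


if __name__ == "__main__":
    # Hypothetical records standing in for an internal dataset.
    sales = [
        {"region": "North", "revenue": 1200.0},
        {"region": "South", "revenue": 950.5},
        {"region": "West", "revenue": 430.0},
    ]
    claimed_total = 2600.0  # hypothetical figure quoted in a chat response
    actual_total = recompute_total(sales, "revenue")

    if verify_claim(claimed_total, actual_total):
        print("Claim verified against source data")
    else:
        print(f"Mismatch: claimed {claimed_total}, recomputed {actual_total}")
```

Run as written, this prints `Mismatch: claimed 2600.0, recomputed 2580.5`. Comparing with `math.isclose` rather than `==` tolerates harmless floating-point rounding while still flagging a figure that is materially wrong.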

3. Building an Ethical AI Framework

Responsible adoption starts at the leadership level. Executives and data officers should define a governance framework that establishes:

- Acceptable Use Guidelines – Specify which tools and use cases are authorized for business tasks.
- Data Protection Protocols – Prohibit the entry of confidential or personally identifiable data into public LLMs.
- Verification Requirements – Require human review of AI-assisted outputs before decisions are made or results are published.
- Continuous Training – Invest in AI literacy programs for employees, emphasizing critical thinking, data ethics, and evaluation of automated insights.

A certified, data-intelligent workforce understands that AI should assist—not replace—human analysis and judgment.
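A data protection protocol is easier to follow when part of it is automated. As a minimal sketch, assuming a team wants to catch obvious identifiers before text is pasted into a public LLM, the Python below masks email addresses, US-style phone numbers, and US Social Security numbers with regular expressions. The patterns and the `redact` helper are illustrative assumptions: regex matching misses names, street addresses, and many other identifiers, so a check like this supplements a written policy and dedicated data loss prevention tooling rather than replacing them.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace matches with placeholders and report which categories were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


if __name__ == "__main__":
    prompt = "Why is churn high for jane.doe@example.com (555-123-4567)?"
    clean, flags = redact(prompt)
    print(clean)  # Why is churn high for [EMAIL] ([PHONE])?
    print(flags)  # ['EMAIL', 'PHONE']
```

Returning the list of categories found, not just the cleaned text, lets a team log or block a submission instead of silently sending a modified prompt.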

4. Practical and Safe Use Cases in Data Analysis

Used wisely, ChatGPT can enhance productivity across analytics workflows. Common, low-risk use cases include:

- Explaining code or formulas (e.g., Python, SQL, or Excel functions).
- Troubleshooting syntax errors or logic issues in scripts.
- Generating sample datasets for testing and training purposes.
- Improving communication by converting technical outputs into business-friendly narratives.
- Brainstorming data visualization approaches for clearer storytelling.

However, ChatGPT should never be used for interpreting sensitive datasets, automating compliance decisions, or drawing final analytical conclusions without human validation.
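Generating sample datasets is among the safer uses listed above because test data can be synthetic from the start. The sketch below, an illustration rather than a prescribed approach, builds a small reproducible orders table with Python's standard library only; the column names, regions, date range, and amount range are hypothetical placeholders for whatever schema a team actually uses.

```python
import csv
import io
import random
from datetime import date, timedelta

# Hypothetical category values; replace with the real schema's categories.
REGIONS = ["North", "South", "East", "West"]


def make_sample_orders(n: int, seed: int = 42) -> list[dict]:
    """Generate n synthetic order rows; no real customer data is involved."""
    rng = random.Random(seed)  # a dedicated, seeded generator makes runs reproducible
    start = date(2024, 1, 1)
    rows = []
    for order_id in range(1, n + 1):
        rows.append({
            "order_id": order_id,
            "order_date": (start + timedelta(days=rng.randrange(365))).isoformat(),
            "region": rng.choice(REGIONS),
            "amount": round(rng.uniform(10, 500), 2),
        })
    return rows


def to_csv(rows: list[dict]) -> str:
    """Serialize rows to CSV text, for example to save as a test fixture."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    print(to_csv(make_sample_orders(5)))
```

Because the generator is seeded, the same rows come back on every run, which keeps tests repeatable, and a prompt built around this data exposes only its structure, never a real customer record.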

5. The Art of Prompt Engineering

Getting value from ChatGPT depends on how questions are framed. “Prompt engineering” is the emerging discipline of crafting precise, contextual inputs that guide the AI toward useful results.

Best practices include:

- Be Specific: Clearly state the task, data type, and desired outcome.
- Provide Context: Explain what problem you’re solving and for whom.
- Assign Roles: Tell the AI to act as a “data analyst,” “Python tutor,” or “BI specialist.”
- Define Tone and Depth: Specify whether you need a brief summary or a technical breakdown.

Encouraging employees to experiment with and iterate on prompts can improve both the results and their understanding of the model’s behavior, while keeping critical thinking front and center.
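The four best practices can be captured in a reusable template so that prompts stay consistent across a team. In this minimal sketch, `PromptSpec` and `build_prompt` are hypothetical names; the code only assembles prompt text and does not call ChatGPT or any other service.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    role: str     # Assign Roles: who the model should act as
    context: str  # Provide Context: the problem and the audience
    task: str     # Be Specific: the task, data type, and desired outcome
    depth: str    # Define Tone and Depth: summary vs. technical breakdown


def build_prompt(spec: PromptSpec) -> str:
    """Assemble a prompt that states role, context, task, and depth in a fixed order."""
    missing = [name for name, value in vars(spec).items() if not value.strip()]
    if missing:
        raise ValueError(f"Prompt is missing: {', '.join(missing)}")
    return "\n".join([
        f"Act as a {spec.role}.",
        f"Context: {spec.context}",
        f"Task: {spec.task}",
        f"Response format: {spec.depth}",
    ])


if __name__ == "__main__":
    spec = PromptSpec(
        role="data analyst",
        context="A marketing manager wants to understand a query before reusing it.",
        task=(
            "Explain what this SQL query returns: "
            "SELECT region, SUM(amount) FROM orders GROUP BY region;"
        ),
        depth="A brief, non-technical summary in under 100 words.",
    )
    print(build_prompt(spec))
```

Refusing to build a prompt with an empty field is a deliberate design choice: it nudges users to supply context and a desired depth instead of sending a bare one-line question.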

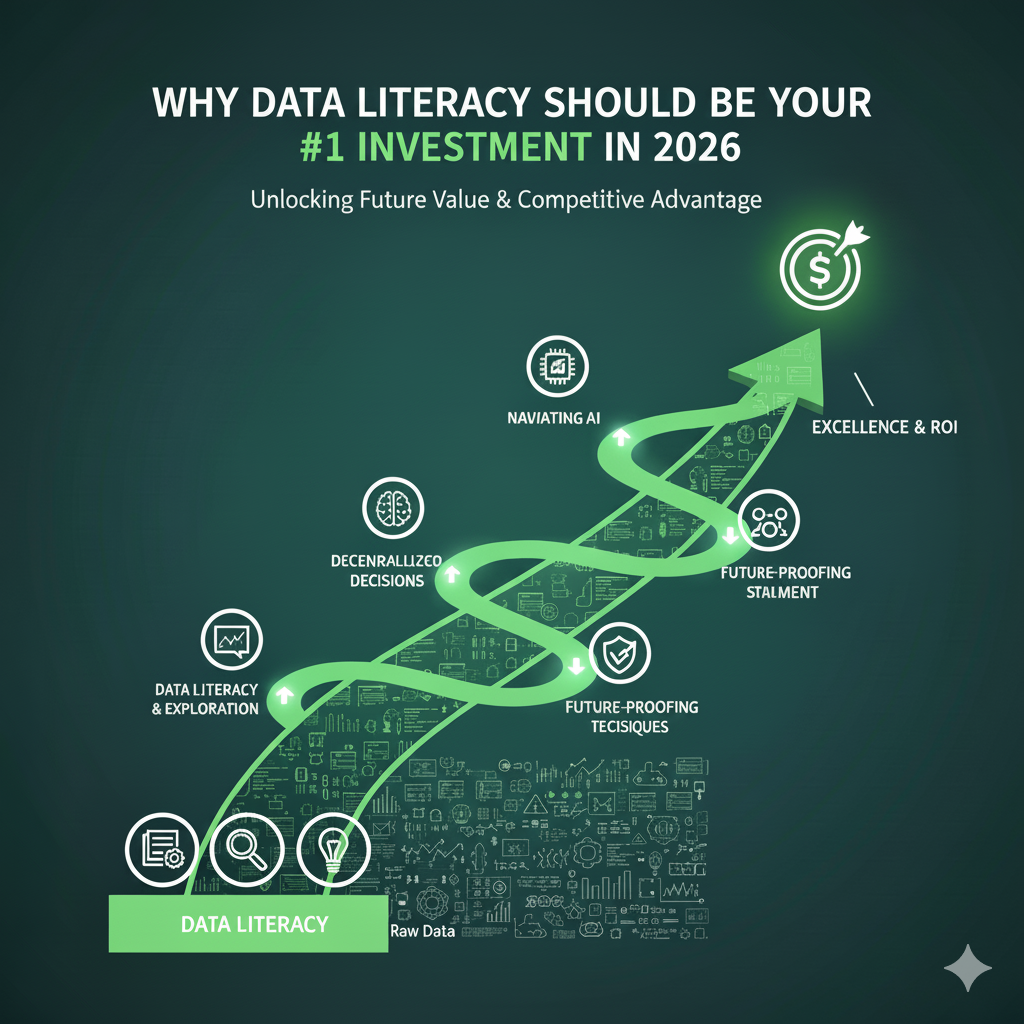

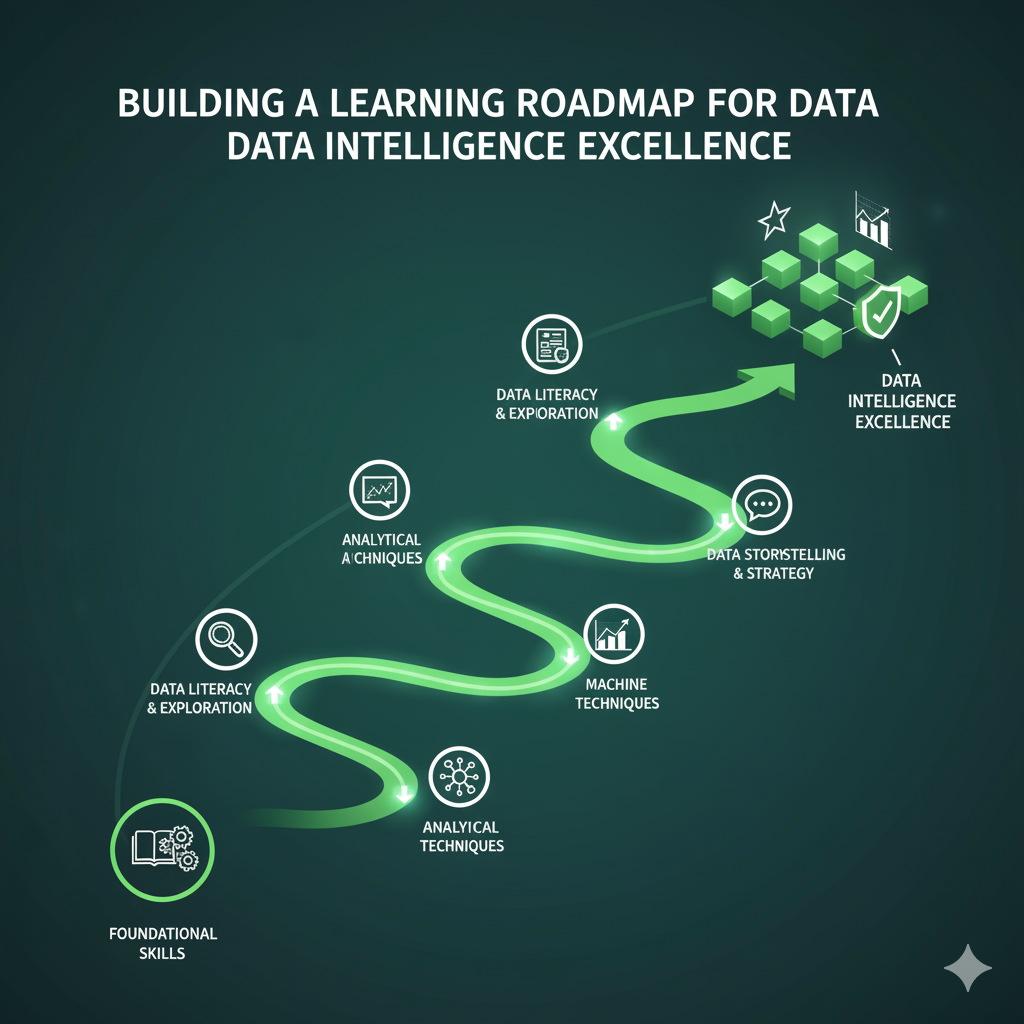

6. Preparing for the Future of AI-Driven Data Work

As LLMs evolve, their analytical capabilities will expand. Specialized models are already emerging for finance, healthcare, logistics, and governance, offering more targeted and secure solutions.

Forward-thinking organizations should begin developing AI competency frameworks to identify skill gaps, integrate ethical guidelines, and prepare teams for these new tools. Certification bodies like CDIPS play a crucial role in shaping these standards—ensuring that professionals who work with data and AI uphold integrity, transparency, and accountability.

7. Key Takeaways for Data Leaders

- Adoption is inevitable. Employees and competitors are already leveraging AI for data-related tasks.
- Governance is essential. Implement data protection and review processes before allowing organization-wide use.
- Training drives impact. Equip your teams with the skills to use AI effectively and responsibly.
- Human oversight remains non-negotiable. AI enhances productivity, but judgment, context, and ethics remain human strengths.

The future of data work will be defined not by how much AI organizations adopt, but by how intelligently and responsibly they use it.
