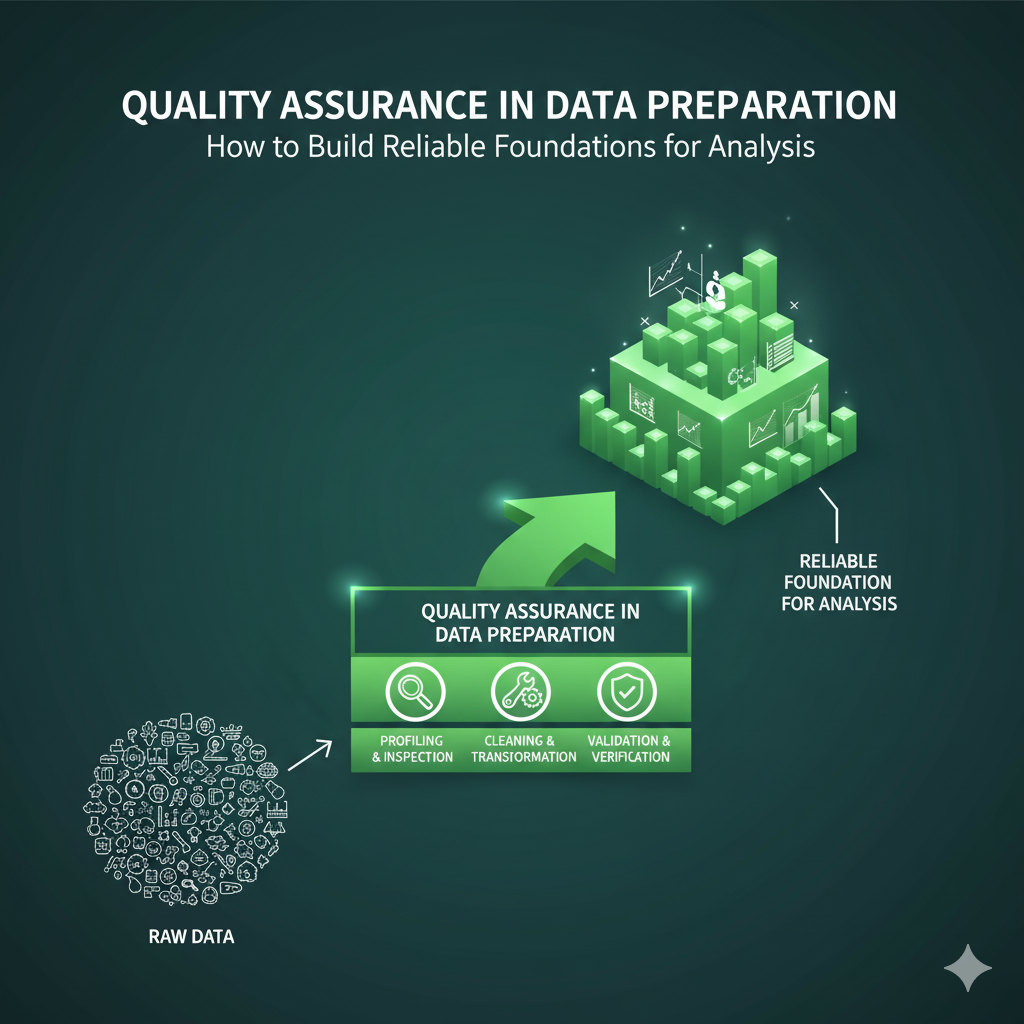

Quality Assurance in Data Preparation: How to Build Reliable Foundations for Analysis

Every great data project starts long before the first chart is built or the first insight is shared. The real work begins in data preparation — the often-overlooked phase that can determine whether an organization’s analytics efforts succeed or fail.

At the Certified Data Intelligence Professionals Society (CDIPS), we view data preparation as a strategic investment in quality, trust, and ethical intelligence. Done well, it ensures that every dashboard, report, and model is grounded in reliable information. Done poorly, it leads to misleading insights and costly decisions.

The lesson is simple: clean, verified data equals credible outcomes.

1. The Value of Data Preparation

By common estimates, data professionals spend up to 70% of their time preparing data for analysis. That can look inefficient, but the time translates directly into the quality of the insights produced. Poorly prepared data leads to poor results, a principle summarized by the classic phrase “garbage in, garbage out.”

Imagine presenting a client report claiming a 100% increase in marketing spend, only to be told the numbers are impossible. A quick review reveals the culprit: duplicate transaction records that inflated the totals. Trust evaporates instantly — not because of the model or the visuals, but because of inadequate data QA.

Data preparation is the safeguard against these mistakes. It ensures accuracy, consistency, and reliability before analysis begins.

2. The Core Steps of Data Preparation

While data preparation includes several phases — such as data profiling and data engineering (ETL) — the first and most critical stage is data quality assurance (QA). QA focuses on identifying and resolving issues that compromise the validity of your dataset.

Here are the five most common QA issues — and how to fix them.

Issue 1: Incorrect Data Types

When a dataset contains mismatched or inaccurate data types (for example, phone numbers stored as numbers, or postal codes that lose their leading zeros when stored as integers), analytical tools can misread or reject the data.

Solution:

- Reformat or re-encode fields to ensure consistency.
- Standardize numeric, text, and categorical variables across systems.
- Automate data type validation in your ETL or QA pipeline.

Accurate data types reduce processing errors and improve the reliability of calculations and visualizations.
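As a rough illustration of automated type validation, the sketch below reads every field as text first (so leading zeros survive) and then checks each field against the type it is expected to have. The column names, the sample rows, and the five-digit postal code rule are assumptions made for this example, not part of any particular pipeline.

```python
import csv
import io

# Hypothetical sample data: postal codes and phone numbers are identifiers,
# not quantities, so they should stay as text.
RAW_CSV = """customer_id,postal_code,phone,order_total
1,02134,6175550100,19.99
2,10001,2125550199,5
3,00501,6315550123,n/a
"""

def load_rows(text):
    """Read every field as a string so leading zeros are preserved."""
    return list(csv.DictReader(io.StringIO(text)))

def validate_row(row):
    """Return a list of type problems found in one row."""
    problems = []
    # Assumed rule for this example: a postal code is exactly five digits.
    if not (row["postal_code"].isdigit() and len(row["postal_code"]) == 5):
        problems.append("postal_code must be a 5-digit string")
    # order_total is a quantity, so it must parse as a number.
    try:
        float(row["order_total"])
    except ValueError:
        problems.append("order_total is not numeric")
    return problems

rows = load_rows(RAW_CSV)
report = {row["customer_id"]: validate_row(row) for row in rows}

for customer_id, problems in report.items():
    print(customer_id, problems or "ok")
```

A check like this can run at the start of a QA step, with rows that report problems routed to review rather than silently coerced.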

Issue 2: Duplicate Records

Duplicate data is one of the most common causes of inflated metrics and reporting errors. These redundancies can bias results or distort performance indicators.

Solution:

- Identify and remove duplicate entries using unique identifiers.
- Keep duplicates only when they serve a specific analytic purpose (e.g., upsampling for machine learning).
- Implement deduplication checks during ingestion.

Consistent duplicate management ensures that each real-world transaction or entity is counted exactly once.
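One way to sketch a deduplication check is shown below. It keeps the first record seen for each unique identifier and sets the rest aside for inspection. The `txn_id` field and the sample transactions are hypothetical, and "keep the first occurrence" is an assumed policy that may not suit every dataset.

```python
def deduplicate(records, key_fields):
    """Keep the first record for each unique key; return (kept, dropped)."""
    seen = set()
    kept, dropped = [], []
    for record in records:
        key = tuple(record[field] for field in key_fields)
        if key in seen:
            dropped.append(record)  # set aside rather than silently discard
        else:
            seen.add(key)
            kept.append(record)
    return kept, dropped

# Hypothetical transactions in which every record was ingested twice.
transactions = [
    {"txn_id": "T1", "amount": 500.0},
    {"txn_id": "T2", "amount": 250.0},
    {"txn_id": "T1", "amount": 500.0},
    {"txn_id": "T2", "amount": 250.0},
]

kept, dropped = deduplicate(transactions, ["txn_id"])
raw_total = sum(t["amount"] for t in transactions)
clean_total = sum(t["amount"] for t in kept)

print(f"raw total: {raw_total}, deduplicated total: {clean_total}")
```

In this sample the raw total is exactly double the deduplicated total, which is the kind of apparent 100% increase that duplicate records can produce.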

Issue 3: Inconsistent Categorical Values

When categories are entered inconsistently — like mixing full state names (“New York”) with abbreviations (“NY”) — analysis becomes fragmented and unreliable.

Solution:

- Define and document a standard naming convention (e.g., always use abbreviations).
- Use “find and replace” or mapping tables to enforce standardization.
- Maintain both raw and standardized versions for traceability.

A consistent taxonomy enhances interpretability and supports cross-departmental reporting integrity.
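A mapping table can be sketched as a simple lookup. In the example below the raw value is kept alongside the standardized one, and anything the table does not recognize is flagged for review instead of being guessed. The `STATE_MAP` contents and the field names are illustrative assumptions.

```python
# Hypothetical mapping table: lowercase raw value -> standard abbreviation.
STATE_MAP = {
    "new york": "NY",
    "ny": "NY",
    "california": "CA",
    "ca": "CA",
}

def standardize_state(value):
    """Return the standard abbreviation, or None if the value is unmapped."""
    key = value.strip().lower()
    return STATE_MAP.get(key)

records = [
    {"state_raw": "New York"},
    {"state_raw": "NY "},
    {"state_raw": "Calif."},
]

# Add a standardized column and leave the raw column untouched for traceability.
for record in records:
    record["state"] = standardize_state(record["state_raw"])

# Values that did not match the table need a human decision or a new mapping.
unmapped = [r["state_raw"] for r in records if r["state"] is None]
print(unmapped)
```

Returning `None` for unknown values is a deliberate choice here: it surfaces gaps in the mapping table rather than hiding them.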

Issue 4: Empty or Missing Values

Empty values are among the most complex QA challenges because their treatment depends heavily on context. Simply replacing them with zeros or averages can skew results if done blindly.

Solution:

- Keep them when blanks are valid (e.g., intentionally missing entries).
- Impute them using logical or statistical estimates when the context supports it.
- Remove them when the dataset is large enough and the blanks introduce noise.

Documenting why and how you treat missing data is crucial for reproducibility and auditability — key principles of CDIPS professional standards.
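The three options above, together with the documentation step, might look like the following sketch. The `handle_missing` helper, its strategy names, and the sample `age` field are hypothetical. Median imputation is only one of several possible estimates and is used here purely as an example.

```python
from statistics import median

def handle_missing(rows, field, strategy):
    """Apply one strategy to missing (None) values in `field`.

    Returns (new_rows, note), where note records what was done so the
    decision can be documented. The input rows are not modified.
    """
    missing = [r for r in rows if r[field] is None]
    if strategy == "keep":
        return [dict(r) for r in rows], f"kept {len(missing)} blank {field} value(s)"
    if strategy == "remove":
        kept = [dict(r) for r in rows if r[field] is not None]
        return kept, f"removed {len(missing)} row(s) with blank {field}"
    if strategy == "impute_median":
        fill = median(r[field] for r in rows if r[field] is not None)
        filled = [
            dict(r, **{field: fill if r[field] is None else r[field]})
            for r in rows
        ]
        return filled, f"imputed {len(missing)} blank {field} value(s) with median {fill}"
    raise ValueError(f"unknown strategy: {strategy}")

# Hypothetical rows with one missing age.
rows = [
    {"id": 1, "age": 34},
    {"id": 2, "age": None},
    {"id": 3, "age": 40},
    {"id": 4, "age": 52},
]

imputed, note = handle_missing(rows, "age", "impute_median")
print(note)
```

Returning a note with every call makes it easy to collect the treatment decisions into a QA log.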

Issue 5: Outlier Values

Outliers can heavily distort averages and predictive models. A single unrealistic entry — like an income value of $2 million in a modest household dataset — can make an entire analysis misleading.

Solution:

- Keep valid outliers if they reflect real-world events.
- Impute or cap values when they are extreme but explainable.
- Remove impossible values.
- Transform them (e.g., via log or scale adjustments) to reduce skew.

Careful handling of outliers preserves both data integrity and analytical fairness.
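The sketch below shows one possible way to flag, cap, and transform outliers. It uses the interquartile range (IQR) rule with the conventional multiplier of 1.5, which is an assumption of this example and not a method prescribed above. The income figures are invented, and the rule that income must be positive is also an assumption about this hypothetical dataset.

```python
import math
from statistics import quantiles

def iqr_fences(values, k=1.5):
    """Return (low, high) fences at k * IQR beyond the first and third quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    return q1 - k * spread, q3 + k * spread

# Hypothetical household incomes, including one impossible and one extreme value.
incomes = [42_000, 48_000, -1, 51_000, 55_000, 58_000, 61_000, 2_000_000]

# Remove impossible values first (assumed rule: income must be positive).
plausible = [v for v in incomes if v > 0]

# Flag values outside the fences for review rather than deleting them outright.
low, high = iqr_fences(plausible)
flagged = [v for v in plausible if v < low or v > high]

# Option: cap extreme values at the fences.
capped = [min(max(v, low), high) for v in plausible]

# Option: log-transform to reduce skew (valid because all values are positive).
logged = [math.log10(v) for v in plausible]

print("flagged for review:", flagged)
```

Whether a flagged value is kept, capped, or transformed remains a judgment call that depends on whether it reflects a real event.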

3. Aligning QA With Business Goals

Data QA isn’t about perfection — it’s about fitness for purpose. Every QA decision should align with the business problem being solved. Removing duplicates, imputing values, or transforming outliers should all serve one goal: ensuring the dataset is accurate and relevant for the intended analysis.

This alignment also fosters cross-functional trust. When data professionals can explain how they validated and prepared data, decision-makers gain confidence in the insights that follow.

4. Building a Culture of Data Quality

Data QA shouldn’t be the responsibility of analysts alone. It requires a culture of quality and accountability across departments — from data collection to reporting.

Organizations can build this culture by:

- Embedding QA checks into data pipelines.
- Providing training on data governance and validation principles.
- Recognizing the value of certified data professionals who uphold standards of ethical data practice.

At CDIPS, we champion this professionalism through certification pathways that ensure practitioners demonstrate mastery in data cleaning, validation, and quality assurance — not just technical fluency, but analytical integrity.

5. The Bottom Line

Data QA is not a tedious prerequisite; it’s a strategic enabler of trust. When analysts commit to structured data preparation, they don’t just improve accuracy — they enhance credibility, decision speed, and organizational confidence.

The next time you prepare data for a project, remember: every minute spent on QA is an investment in clarity, consistency, and professional excellence.

Key Takeaways

- Data QA forms the foundation of credible analytics.
- Address common QA issues, from duplicates to outliers, with structured, documented methods.
- Align data preparation with business goals and ethical standards.
- Certification through CDIPS reinforces professional accountability in data quality management.
